LoRA: A Logical Reasoning Augmented Dataset for Visual Question Answering

NeurIPS 2023

Can you answer the logical questions based on the following image: "If we don't have milk, is there another dairy product that doesn't necessarily contain fat but is rich in protein and can be substituted for breakfast?" and "Is there a food in the image that is cut into a slice and is not dairy?"

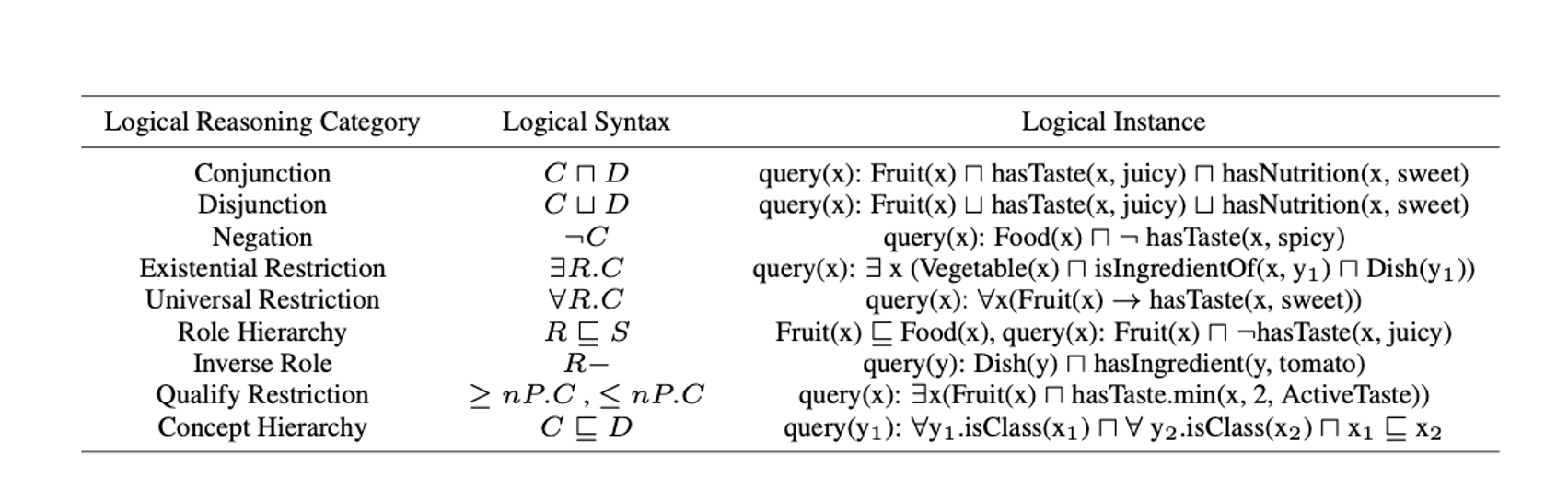

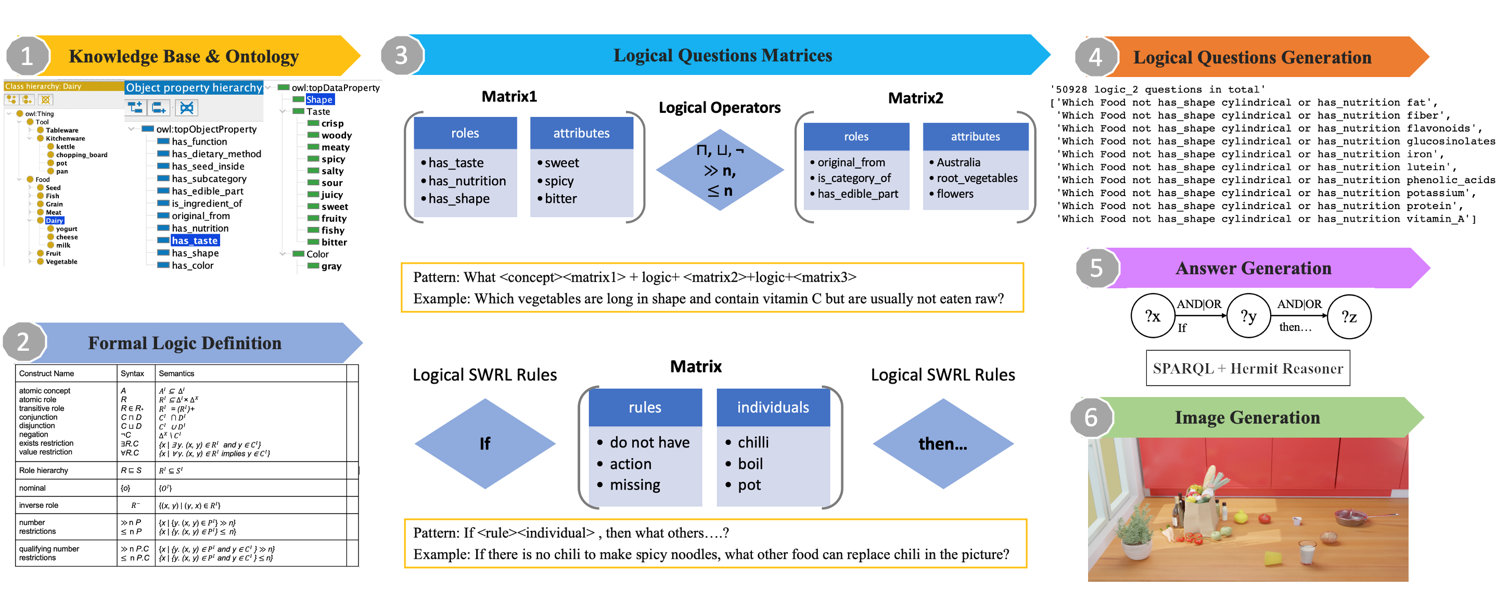

Logical reasoning is a hallmark of human cognition. Humans excel at integrating multimodal information for logical reasoning, and Visual Question Answering (VQA) is a challenging multimodal task that exercises this ability. Large vision-and-language models aim to tackle such reasoning problems, but it is difficult to evaluate the accuracy and consistency of their answers and to detect fabricated content. To address this gap, we introduce LoRA, a novel Logical Reasoning Augmented VQA dataset designed to challenge the complex logical reasoning abilities of VQA models and large vision-and-language models. All realistic images, like the one shown here, and all logical questions in LoRA are automatically generated by our algorithms.
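To make the flavor of such questions concrete, the second example question above ("Is there a food in the image that is cut into a slice and is not dairy?") can be read as an existential query that combines a conjunction with a negation. The sketch below is only an illustration of that reading: the `SceneObject` schema, the attribute names, and the scene contents are all hypothetical and are not taken from the LoRA dataset or its generation algorithms.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SceneObject:
    """One annotated object in a scene (hypothetical schema, not LoRA's format)."""
    name: str
    is_food: bool
    is_dairy: bool
    is_sliced: bool


# Made-up annotations for an illustrative breakfast scene.
scene = [
    SceneObject(name="milk", is_food=True, is_dairy=True, is_sliced=False),
    SceneObject(name="cheese", is_food=True, is_dairy=True, is_sliced=True),
    SceneObject(name="bread", is_food=True, is_dairy=False, is_sliced=True),
    SceneObject(name="knife", is_food=False, is_dairy=False, is_sliced=False),
]


def sliced_non_dairy_food(objects):
    """Return names of objects x with food(x) AND sliced(x) AND NOT dairy(x)."""
    return [
        o.name
        for o in objects
        if o.is_food and o.is_sliced and not o.is_dairy
    ]


matches = sliced_non_dairy_food(scene)
# The question is existential, so any match yields "yes".
answer = "yes" if matches else "no"
print(answer, matches)
```

In this toy scene only the bread satisfies all three conditions, so the answer is "yes". A model that answers correctly must handle both the conjunction and the negation, which is the kind of reasoning the dataset is meant to probe.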

LoRA is built with the following process:

Jingying Gao, Qi Wu, Alan Blair, Maurice Pagnucco

The 37th Conference on Neural Information Processing Systems (NeurIPS), 2023

Paper / Code


You can download our dataset from Google Drive, or check out our GitHub repository.

If you wish to cite our work:

@inproceedings{gao2023lora,
  title={LoRA: A Logical Reasoning Augmented Dataset for Visual Question Answering},
  author={Gao, Jingying and Wu, Qi and Blair, Alan and Pagnucco, Maurice},
  booktitle={Thirty-seventh Conference on Neural Information Processing Systems Datasets and Benchmarks Track},
  year={2023}
}

Jingying Gao, Qi Wu, Alan Blair, Maurice Pagnucco

University of New South Wales, University of Adelaide